Configure the latest open source free version (4.5.0) of Hue with the latest HDP (3.1.4) stack

Introduction

Everybody likes to explore and try out the latest and greatest technologies, but getting hold of them brings both frustration and pleasure. In my case, for some unknown reason, I picked the latest Hue source code from the Hue Git repository and attempted to make it work with the HDP 3.1.4 stack. Hortonworks does not officially support Hue, so starting from scratch was quite challenging. This blog summarizes what I learned from this interesting and challenging task.

I planned this activity as below:

- Get the latest Hue from gethue.com
- Install it
- Pick hue.ini from one of our other working setups and modify it for the new cluster nodes' hostnames
- Start the Hue service
- Hand over the setup to end users for testing
- Go home in the evening and sleep tight

But as all administrators know, nothing goes as planned, and nothing comes without new learnings.

1) Get and install the latest Hue

    yum install -y git
    git clone https://github.com/cloudera/hue.git
    sudo yum install -y ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain gcc gcc-c++ krb5-devel libffi-devel libxml2-devel libxslt-devel make mysql mysql-devel openldap-devel python-devel sqlite-devel gmp-devel libtidy maven
    cd hue
    make apps

2) Create a hue user and database in MySQL/MariaDB

    create database huedb;
    create user 'hue'@'localhost' identified by 'PASSWORD';
    grant all privileges on huedb.* to 'hue'@'localhost';
    create user 'hue'@'%' identified by 'PASSWORD';
    grant all privileges on huedb.* to 'hue'@'%';
    create user 'hue'@'HOSTNAME_HUE_SERVER' identified by 'PASSWORD';
    grant all privileges on huedb.* to 'hue'@'HOSTNAME_HUE_SERVER';
    flush privileges;

3) Create the hue user on the hue server

useradd hue

4) Update the "desktop/conf/pseudo-distributed.ini" file so that Hue listens on port 8000 and uses the MySQL/MariaDB database

a) Where the Hue process listens for requests:

    [desktop]
    http_host=<HUE_SERVER_HOSTNAME>
    http_port=8000

b) Use the remote MySQL/MariaDB database (the [[database]] subsection sits under [desktop]):

    [[database]]
      engine=mysql
      host=<MYSQL_DB_HOSTNAME>
      port=<MYSQL_DB_PORT>
      user=hue
      password=<hue-PASSWORD>
      name=huedb

5) Configure Hue to load the existing data and create the necessary database tables

    build/env/bin/hue syncdb --noinput

6) Start the Hue process and Test

a. Start hue process:

build/env/bin/supervisor

b. Verify that everything works:

i. Open a browser and point it to http://<HUE-HOSTNAME>:8000

ii. On the Hue server, check the output of the "netstat -tulpn | grep 8000" command. You should see the Hue process listening for connections on port 8000.

If you get a login prompt, Hue is installed and configured properly.
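The port check above can also be scripted, which is handy when you repeat the setup on several nodes. The following is a minimal sketch, not part of the original setup: the function name `is_port_open` is my own, and it only confirms that something accepts TCP connections on the port, not that the process is Hue.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and applies the timeout for us.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For example, `is_port_open("<HUE-HOSTNAME>", 8000)` should return True once the supervisor has started Hue.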

Hue Login screen


7) Configure Hue to access the Hadoop cluster's HDFS, YARN, and Hive services

a. Yarn service

Open the Hue configuration file and update the below configuration:

    [hadoop]
      [[yarn_clusters]]
        [[[default]]]
          resourcemanager_host=<RM_HOST>
          submit_to=True
          resourcemanager_api_url=http://<RM_HOST>:8088
          proxy_api_url=http://<RM_HOST>:8088
          history_server_api_url=http://<RM_HOST>:19888

Note: Verify the port for each of the above services in Ambari and update them accordingly. With YARN HA, a request to any ResourceManager instance is redirected to the active ResourceManager.
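If you want to see which ResourceManager is currently active before filling in <RM_HOST>, the ResourceManager REST API exposes a cluster information endpoint at /ws/v1/cluster/info whose response includes an haState field. The sketch below is only an illustration: the function names are mine, it assumes an unsecured (non-Kerberos) HTTP endpoint on port 8088, and it assumes each ResourceManager answers this endpoint itself rather than redirecting.

```python
import json
from urllib.request import urlopen


def parse_ha_state(cluster_info_json: str) -> str:
    """Extract haState (for example ACTIVE or STANDBY) from a cluster info response body."""
    info = json.loads(cluster_info_json)
    return info["clusterInfo"]["haState"]


def fetch_ha_state(rm_host: str, port: int = 8088, timeout: float = 5.0) -> str:
    """Query one ResourceManager and return the haState it reports."""
    url = f"http://{rm_host}:{port}/ws/v1/cluster/info"
    with urlopen(url, timeout=timeout) as resp:
        return parse_ha_state(resp.read().decode("utf-8"))
```

Calling `fetch_ha_state` once per ResourceManager host shows which one to expect traffic to land on.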

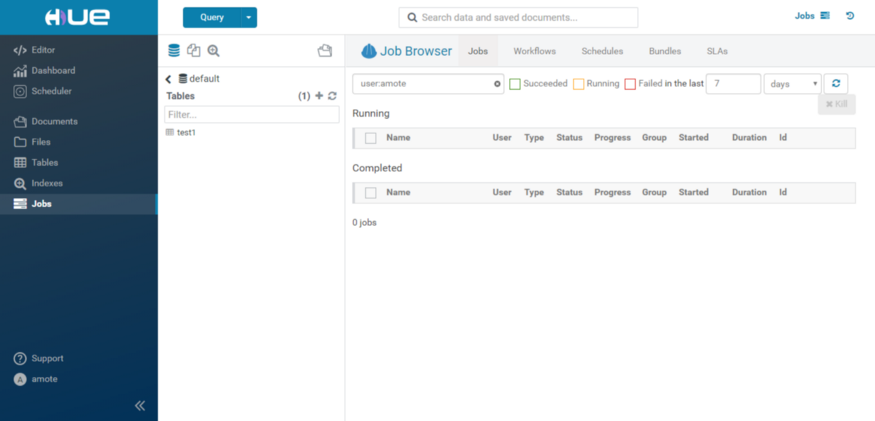

Job Browser


b. Hive service

For Hue to integrate with Hive:

a) I made the below configuration change in the Hive service (the transport mode is binary by default):

    hive.server2.transport.mode=http

b) Open the Hue configuration file and update the below configuration:

    [beeswax]
    hive_server_host=<HIVE_SERVER_HOSTNAME>
    hive_server_port=10001
    hive_discovery_hs2 = true
    hive_discovery_hiveserver2_znode = /hiveserver2
    hive_conf_dir=/etc/hive/conf
    server_conn_timeout=120
    use_get_log_api=false
    max_number_of_sessions=2

    [zookeeper]
      [[clusters]]
        [[[default]]]
          host_ports=<ZK_HOST1>:2181,<ZK_HOST2>:2181,<ZK_HOST3>:2181

You can also get the ZooKeeper host_ports string above from Ambari, under the Hive service.
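Since HiveServer2 discovery depends on the ZooKeeper quorum, it can help to confirm that every entry in host_ports answers before debugging Hue itself. This is a rough sketch under assumptions: the helper names are hypothetical, and it relies on ZooKeeper's "ruok" four-letter command, which newer ZooKeeper releases only answer when it is whitelisted in the server configuration.

```python
import socket
from typing import List, Tuple


def parse_host_ports(host_ports: str) -> List[Tuple[str, int]]:
    """Split 'zk1:2181,zk2:2181' into [('zk1', 2181), ('zk2', 2181)]."""
    pairs = []
    for entry in host_ports.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing comma
        host, _, port = entry.rpartition(":")
        pairs.append((host, int(port)))
    return pairs


def zk_is_ok(host: str, port: int, timeout: float = 3.0) -> bool:
    """Send 'ruok' to a ZooKeeper server; a healthy server replies 'imok'."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"ruok")
            return sock.recv(16) == b"imok"
    except OSError:
        # Unreachable host, refused connection, or no reply within timeout.
        return False
```

Looping over `parse_host_ports(...)` and calling `zk_is_ok` for each pair gives a quick per-node health view.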

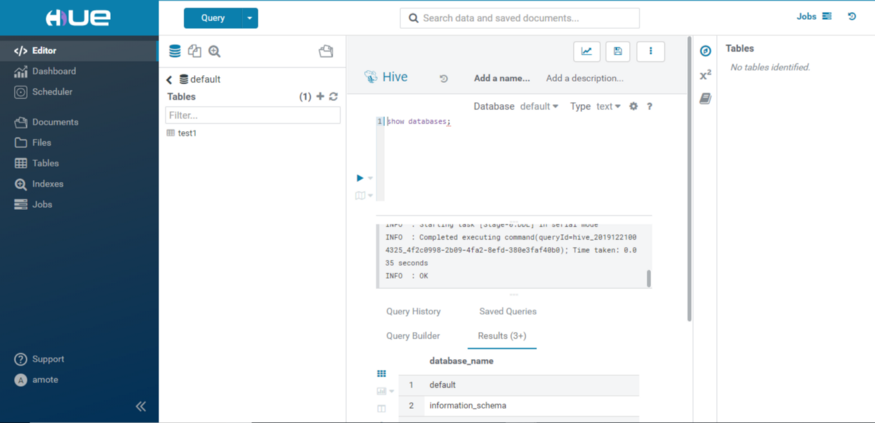

Hue Hive Query



c. HDFS service

In our cluster, we have HDFS high availability configured, so we cannot just put a single NameNode entry in the Hue configuration, because requests sent to that one NameNode are not redirected to the active NameNode. For this to work, you have to configure the Hadoop-HttpFS service.

    [hadoop]
      [[hdfs_clusters]]
        [[[default]]]
          fs_defaultfs=hdfs://<ADDRESS_OF_NAMENODE>
          webhdfs_url=http://<HOSTNAME_hadoop-httpFS>:14000/webhdfs/v1/

You can get ADDRESS_OF_NAMENODE from the fs.defaultFS property under Advanced core-site.
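Before pointing Hue at HttpFS, you can confirm that the webhdfs_url answers a plain WebHDFS LISTSTATUS request. The sketch below is an assumption-laden illustration: the function names are mine, `httpfs.example.com` in the usage note is a placeholder, and passing `user.name` only works with simple (non-Kerberos) authentication.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def liststatus_url(webhdfs_url: str, path: str, user: str = "hue") -> str:
    """Build a WebHDFS LISTSTATUS URL for an absolute HDFS path."""
    base = webhdfs_url.rstrip("/")
    query = urlencode({"op": "LISTSTATUS", "user.name": user})
    return f"{base}{quote(path)}?{query}"


def parse_names(liststatus_json: str) -> list:
    """Return the pathSuffix of every entry in a LISTSTATUS response body."""
    statuses = json.loads(liststatus_json)["FileStatuses"]["FileStatus"]
    return [status["pathSuffix"] for status in statuses]


def list_dir(webhdfs_url: str, path: str, user: str = "hue") -> list:
    """List an HDFS directory through HttpFS/WebHDFS."""
    with urlopen(liststatus_url(webhdfs_url, path, user), timeout=10) as resp:
        return parse_names(resp.read().decode("utf-8"))
```

For example, `list_dir("http://httpfs.example.com:14000/webhdfs/v1/", "/tmp")` should return the entries under /tmp if HttpFS and impersonation are set up correctly.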

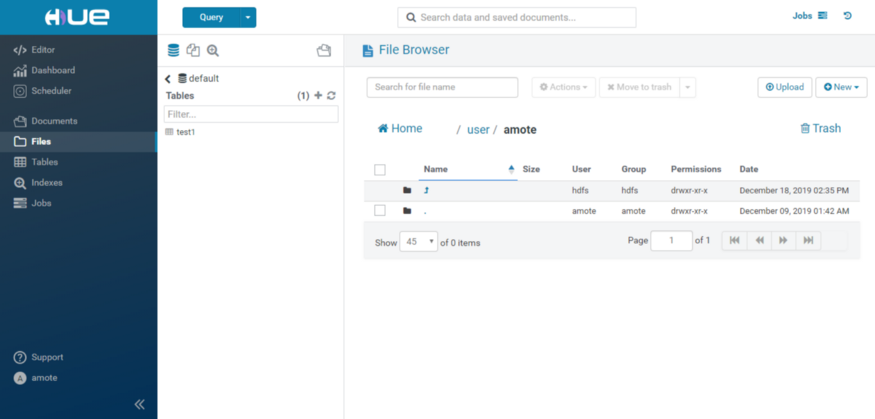

Hue File Browser


Below are the steps that might test your patience if not done properly:

- Dependencies during the build from source. You may have to install a few other dependent packages besides the ones mentioned above.
- Make sure the "hue" user is created on the Hue server system.
- Hive service transport mode. Initially, I spent a lot of time trying to make it work in binary mode, but I was not lucky.
- Install the Hadoop-HttpFS service in case you have the HDFS HA setup.
- Enable user impersonation for hue. If these configurations are not present, impersonation will not be allowed, and the connection will fail.
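The post does not list the impersonation properties, so the following is my assumption based on the standard Hadoop proxy-user settings: `hadoop.proxyuser.hue.hosts` and `hadoop.proxyuser.hue.groups` in core-site.xml, and the equivalent `httpfs.proxyuser.hue.*` keys in httpfs-site.xml. A minimal sketch, with hypothetical helper names, that reports which of these keys are missing from a site file:

```python
import xml.etree.ElementTree as ET


def read_site_properties(xml_text: str) -> dict:
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def missing_proxyuser_keys(props: dict, prefix: str = "hadoop", user: str = "hue") -> list:
    """Return the proxyuser keys for `user` that are absent or empty in props."""
    wanted = [
        f"{prefix}.proxyuser.{user}.hosts",
        f"{prefix}.proxyuser.{user}.groups",
    ]
    return [key for key in wanted if not props.get(key)]
```

Feed it the contents of core-site.xml with the default prefix, or httpfs-site.xml with `prefix="httpfs"`. On an Ambari-managed cluster, make the actual changes through Ambari rather than by editing the files.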

As Hortonworks does not officially support Hue, the Hue process cannot start automatically as part of the HDP stack. So I wrote systemd unit files for the Hue and Hadoop-HttpFS services.

d. Hue service

- Create hue.service in the /etc/systemd/system directory:

    [Unit]
    Description = Start Hue service
    After = network.target

    [Service]
    Type=simple
    Restart=always
    ExecStart = /home/hue/hue/build/env/bin/supervisor -d

    [Install]
    WantedBy = multi-user.target

- systemctl enable hue.service
- systemctl daemon-reload
- Now you can start, stop, and check the status of the Hue service using the systemctl command.

e. Hadoop-HttpFS service

- Create hadoop-httpfs.service in the /etc/systemd/system directory:

    [Unit]
    Description = Start Hadoop HttpFS service
    After = network.target

    [Service]
    Type=forking
    Restart=always
    User=hdfs
    ExecStart = /bin/hdfs --daemon start httpfs

    [Install]
    WantedBy = multi-user.target

- systemctl enable hadoop-httpfs.service
- systemctl daemon-reload
- Now you can start, stop, and check the status of the Hadoop-HttpFS service using the systemctl command.
