Getting things done and keeping in touch with friends and family has never been easier.

Google has promoted it well, and what could be more beautiful than imitating our own intelligence to improve our lives? Good work!

Artificial intelligence is trending, and the world of intelligent assistants is growing: Siri, Cortana, Alexa, Ok Google, Facebook M, and Bixby are some of the assistants offered by well-known tech companies. Voice-enabled applications have seen a lot of activity recently, and voice-activated speaker devices are likely to gain wider user acceptance. It is worth exploring this ecosystem early, to help create the best possible voice experiences as the field matures.

In this post, we will get to know Google Home and API.AI, a platform for building conversational assistants powered by natural language processing (NLP) and machine learning.

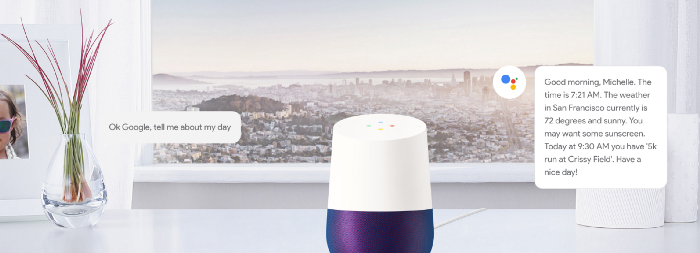

Google Home is a voice-activated speaker powered by the Google Assistant.

Ask it questions.

Tell it to do things.

With support for multiple users, it can distinguish our voice from others in the home, so we get a more personalized experience. What is fascinating is how easy it is to build our own AI assistant too, customized to our own needs, our own IoT-connected devices, and our own custom APIs. The sky’s the limit.

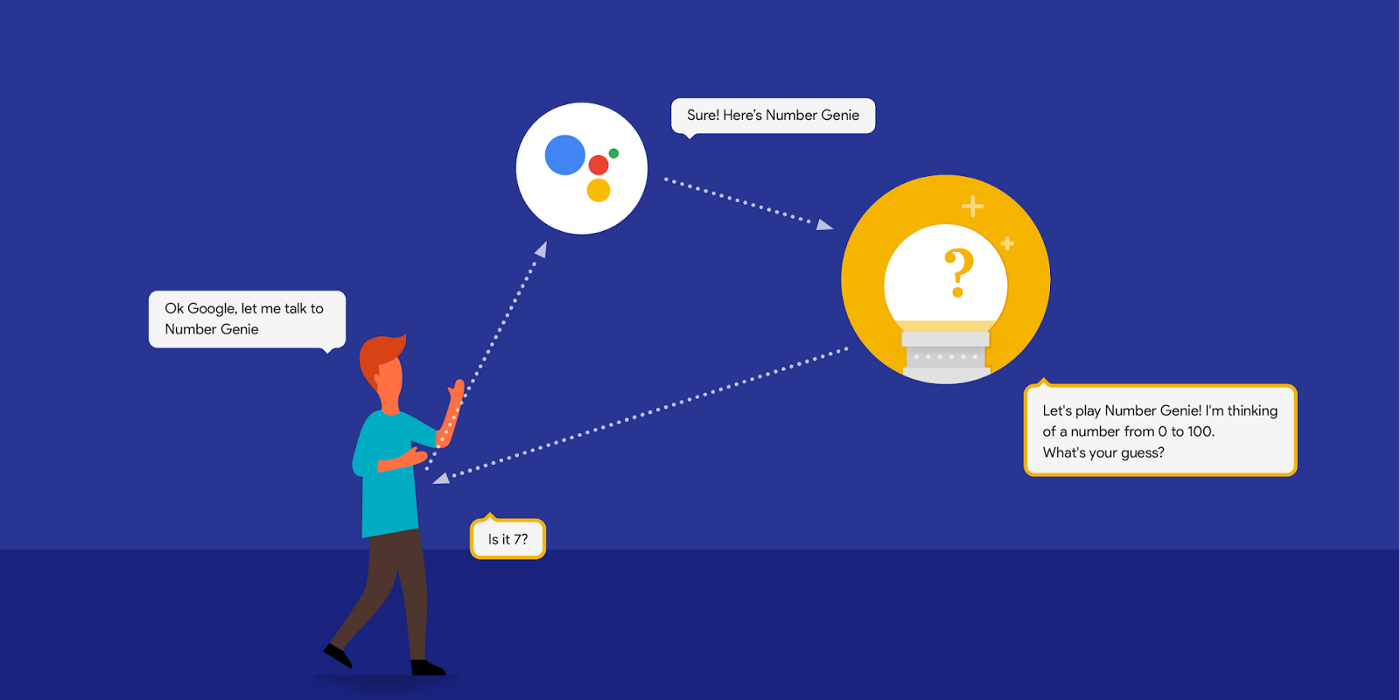

Google opened the Google Assistant platform to developers in December 2016, and the platform currently supports building Conversation Actions for the Google Home device. It is widely expected that the same Actions will eventually be available across Google’s other devices and applications.

Image credits: Google Home

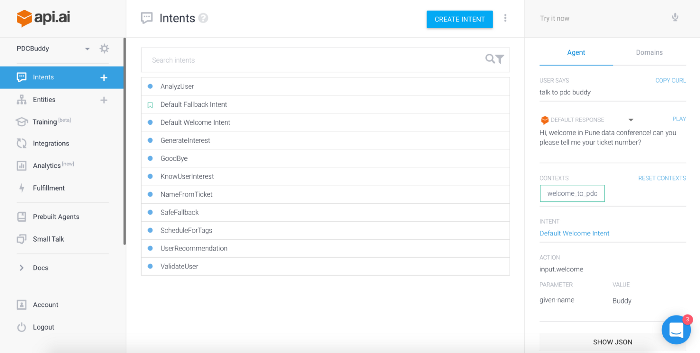

API.AI (formerly Speaktoit) is a developer of human–computer interaction technologies based on natural language conversations. It provides a conversational user experience platform that enables brand-unique, natural language interactions for devices, applications, and services. Developers can use API.AI services for speech recognition, natural language processing (intent recognition and context awareness), and conversation management to quickly and easily differentiate their business, increase customer satisfaction, and improve business processes.

Acquired by Google in September 2016, API.AI provides tools to developers building apps (“Actions”) for the Google Assistant.

How do they do it?

Image credits: Google

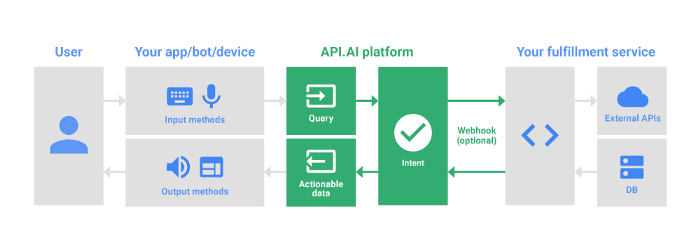

When building chatbots or conversational assistants, one of the first things to consider is conversation workflow management. It is the layer in your bot stack that handles all of your natural language processing needs. Whenever a user types or says something to the bot, you need a good conversation workflow management tool to deal with the messiness of human verbal communication.

API.AI provides such a platform: it is easy to learn and comprehensive enough for developing Conversation Actions. It is a good example of a simple approach to the complex problem of human-to-machine communication, combining natural language processing with machine learning.

Some key concepts:

Agents are NLU (Natural Language Understanding) modules for applications. Their purpose is to transform natural user language into actionable data, and they can be designed to manage a conversation flow in a specific way. Agents are platform agnostic: you only have to design an agent once, and then you can integrate it with a variety of platforms using API.AI’s SDKs and integrations, or download files compatible with Alexa or Cortana apps.

Machine learning allows an agent to understand user inputs in natural language and convert them into structured data, extracting relevant parameters. In API.AI terminology, the agent uses machine learning algorithms to match user requests to specific intents and uses entities to extract relevant data from them. The agent learns both from the data you provide (annotated examples in intents and entries in entities) and from the language models developed by API.AI. Based on this data, it builds a model for deciding which intent a user input should trigger and what data needs to be extracted. The model is unique to the agent and adjusts dynamically to changes made in the agent and in the API.AI platform. To keep the model improving, the agent needs to be trained continually on real conversation logs.
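To get a feel for what “matching a user request to an intent” means, here is a deliberately tiny Python sketch. It is not how API.AI works internally: API.AI trains its own machine learning model and combines it with its language models, while this toy swaps in plain bag-of-words cosine similarity against the annotated examples. The intent names, example phrases, and the 0.3 threshold are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical annotated examples: intent name -> example user phrases.
TRAINING_EXAMPLES = {
    "turn_on_lights": [
        "turn on the lights",
        "switch the lights on",
        "lights on please",
    ],
    "get_weather": [
        "what is the weather like",
        "will it rain today",
        "weather forecast for tomorrow",
    ],
}


def vectorize(text: str) -> Counter:
    """Lowercase, split into word tokens, and count them (a bag of words)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def train(examples: dict) -> dict:
    """Toy 'training': vectorize every annotated example once, up front."""
    return {intent: [vectorize(p) for p in phrases] for intent, phrases in examples.items()}


def match_intent(model: dict, utterance: str, threshold: float = 0.3):
    """Return (intent, score) for the most similar example, or (None, score) if too weak."""
    query = vectorize(utterance)
    best_intent, best_score = None, 0.0
    for intent, vectors in model.items():
        for vector in vectors:
            score = cosine(query, vector)
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        # Nothing is close enough; a real agent would typically fall back here.
        return None, best_score
    return best_intent, best_score


if __name__ == "__main__":
    model = train(TRAINING_EXAMPLES)
    print(match_intent(model, "Please turn the lights on")[0])   # turn_on_lights
    print(match_intent(model, "Is it going to rain today?")[0])  # get_weather
```

Adding more annotated examples to an intent directly widens what this toy can match, which mirrors, very crudely, why the real agent keeps improving as it is trained on real conversation logs.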

An intent represents a mapping between what a user says and what action the software should take.

An entity represents a concept and serves as a powerful tool for extracting parameter values from natural language inputs. The entities used in a particular agent depend on the parameter values the agent is expected to return.
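Intents and entities can be illustrated together with a small Python sketch, assuming a simplified model where an entity is a set of reference values with synonyms and an intent names the action to run plus the parameters it needs. The entity names (`room`, `color`), the `lights.set_color` action, and the longest-synonym-first lookup are hypothetical stand-ins, not API.AI’s actual extraction logic.

```python
import re

# Hypothetical entity definitions: entity name -> {reference value: [synonyms]}.
ENTITIES = {
    "room": {
        "living room": ["living room", "lounge"],
        "kitchen": ["kitchen"],
        "bedroom": ["bedroom", "master bedroom"],
    },
    "color": {
        "red": ["red", "crimson"],
        "blue": ["blue", "navy"],
        "warm white": ["warm white", "soft white"],
    },
}

# Hypothetical intent: the action to trigger and the parameters it needs.
INTENT = {
    "name": "set_light_color",
    "action": "lights.set_color",
    "required_parameters": ["room", "color"],
}


def extract_parameters(utterance: str, entities: dict) -> dict:
    """Return {entity: reference value} for each entity mentioned in the utterance."""
    text = utterance.lower()
    params = {}
    for entity, values in entities.items():
        # Flatten to (synonym, reference) pairs, longest synonym first,
        # so a multi-word synonym wins over a shorter one it contains.
        pairs = sorted(
            ((syn, ref) for ref, syns in values.items() for syn in syns),
            key=lambda pair: len(pair[0]),
            reverse=True,
        )
        for synonym, reference in pairs:
            if re.search(r"\b" + re.escape(synonym) + r"\b", text):
                params[entity] = reference  # normalize the synonym to its reference value
                break
    return params


def resolve_action(intent: dict, params: dict) -> dict:
    """Combine the matched intent with extracted parameters into actionable data."""
    missing = [p for p in intent["required_parameters"] if p not in params]
    # An agent could prompt the user for anything listed in "missing".
    return {"action": intent["action"], "parameters": params, "missing": missing}


if __name__ == "__main__":
    print(extract_parameters("Make the lounge lights crimson", ENTITIES))
    # {'room': 'living room', 'color': 'red'}
    print(resolve_action(INTENT, extract_parameters("make it blue", ENTITIES))["missing"])
    # ['room']
```

Normalizing synonyms to one reference value (“lounge” becomes “living room”) is what lets the software behind the intent work with a small, fixed set of parameter values.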

API.AI relation to other components & process flow — Image credit

Getting to know api.ai and getting started …

Easy to Learn:

“Ok Google. Let’s get started with API.AI”

These overview videos and tutorials help you get acquainted with the platform. They are useful for understanding the platform and its purpose, not just from a development perspective but also in terms of how it affects daily life and how that understanding can steer development in a good direction.

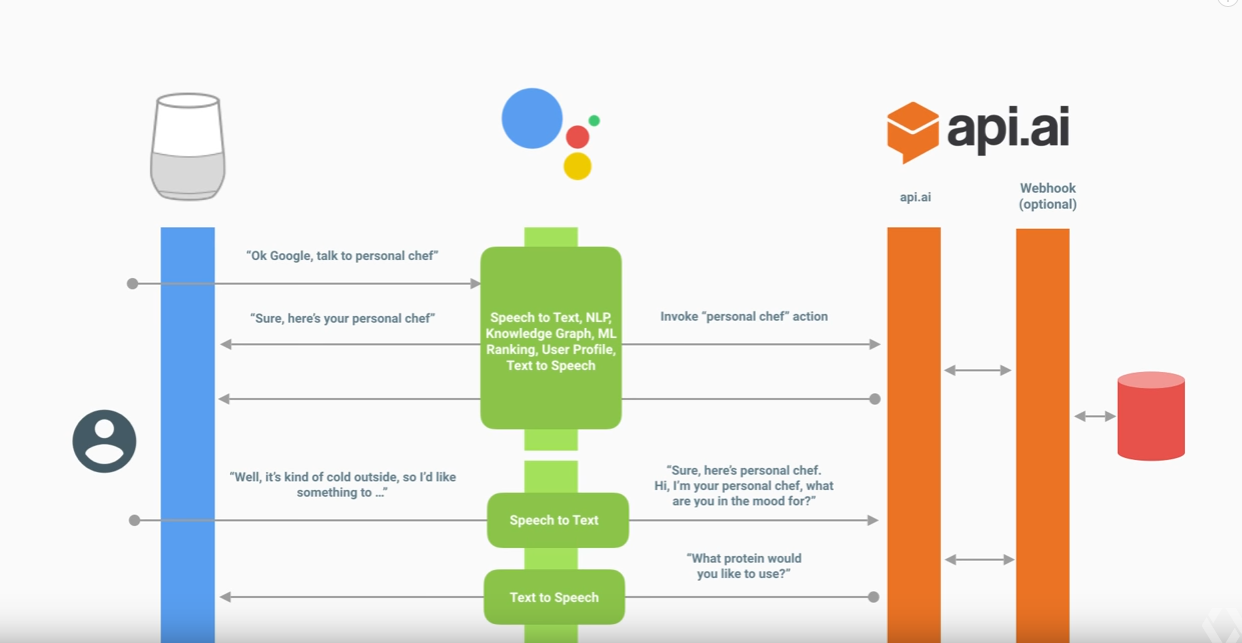

It helps to think through the conversational flow we expect: grab pen and paper, draw it, and then add the corresponding API.AI components to build that flow.
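Once the flow is drawn on paper, it can also help to write it down as data and replay a few sample conversations before building anything. The Python sketch below uses a hypothetical pizza-ordering flow; the state names, prompts, and intent labels are made up, and the dictionary is our own format, not something API.AI imports. Each transition maps a matched intent to the next state, which is roughly the role that intents and contexts play in an API.AI agent.

```python
# Hypothetical flow: state -> the prompt spoken there and intent -> next state.
FLOW = {
    "welcome": {
        "prompt": "Hi! Would you like to order a pizza?",
        "transitions": {"yes": "choose_size", "no": "goodbye"},
    },
    "choose_size": {
        "prompt": "What size would you like: small, medium, or large?",
        "transitions": {"give_size": "confirm"},
    },
    "confirm": {
        "prompt": "Shall I place the order?",
        "transitions": {"yes": "goodbye", "no": "choose_size"},
    },
    "goodbye": {"prompt": "Thanks, talk to you soon!", "transitions": {}},
}


def find_broken_transitions(flow: dict) -> list:
    """List (state, intent, target) transitions whose target state is undefined."""
    return [
        (state, intent, target)
        for state, node in flow.items()
        for intent, target in node["transitions"].items()
        if target not in flow
    ]


def run_conversation(flow: dict, start: str, intents: list) -> list:
    """Replay a sequence of matched intents and collect the prompts the user would hear."""
    state = start
    prompts = [flow[state]["prompt"]]
    for intent in intents:
        transitions = flow[state]["transitions"]
        if intent not in transitions:
            # Unexpected input: stay in the same state and re-prompt (a simple fallback).
            prompts.append("Sorry, I didn't get that. " + flow[state]["prompt"])
            continue
        state = transitions[intent]
        prompts.append(flow[state]["prompt"])
    return prompts


if __name__ == "__main__":
    print(find_broken_transitions(FLOW))  # []
    for line in run_conversation(FLOW, "welcome", ["yes", "give_size", "yes"]):
        print(line)
```

`find_broken_transitions` catches arrows on the drawing that point nowhere, and replaying sample intents makes it easy to hear whether the prompts actually sound conversational.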

Conversation workflow with Google home, Google assistant, and api.ai — Image credits: Google

Easy to Develop:

“Ok Google. How do I develop a conversation app quickly?”

This five-step development guide offers hands-on experience with a simple conversation assistant, covering everything from the initial concept through development and integration.

Developer Console: design, test, and tune, all in one place

Easy to Test:

“Ok Google. How do I preview and test Actions?”

Google provides a web simulator that lets us preview Actions built with API.AI or the Actions SDK in an easy-to-use interface with debugging and voice input. It helps us make sure that Conversation Actions actually sound conversational, and it lets us try them without owning the hardware. In addition to the simulator, we can test on an actual device by launching a preview version of the Actions we built.

Easy to Deploy:

“Ok Google. Let’s deploy the conversation action.”

After setting up the Actions on Google integration, we can deploy the agent so that it is live and available to other users. We first need to set up a Google App Engine project and register the Conversation Action with Google.
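When an Action needs custom logic, the agent can call out to a fulfillment service, and an App Engine project is one place to host it. The Python sketch below is a minimal handler built on the standard library’s `http.server`, not a production setup. It assumes the API.AI v1 webhook JSON shape as we understand it (the matched action under `result.action`, its parameters under `result.parameters`, and a reply carrying `speech` and `displayText`); check those field names against the current API.AI documentation before relying on them. The `lights.set_color` action and the `--serve` flag are hypothetical.

```python
import json
import os
import sys
from http.server import BaseHTTPRequestHandler, HTTPServer


def build_reply(request: dict) -> dict:
    """Turn an (assumed) API.AI v1 webhook request into a spoken reply."""
    result = request.get("result", {})
    action = result.get("action", "")
    params = result.get("parameters", {})

    if action == "lights.set_color":  # hypothetical action name
        room, color = params.get("room"), params.get("color")
        if room and color:
            speech = f"Okay, setting the {room} lights to {color}."
        else:
            speech = "Which room and which color?"
    else:
        speech = "Sorry, I can't help with that yet."

    # Assumed v1 response fields: text to speak and text to display.
    return {"speech": speech, "displayText": speech}


class WebhookHandler(BaseHTTPRequestHandler):
    """Accept a JSON POST from the agent and answer with a JSON reply."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(build_reply(request)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve() -> None:
    # Hosts such as App Engine pass the port in the PORT environment variable.
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), WebhookHandler).serve_forever()


if __name__ == "__main__":
    if "--serve" in sys.argv:
        serve()
    else:
        sample = {"result": {"action": "lights.set_color",
                             "parameters": {"room": "kitchen", "color": "blue"}}}
        print(build_reply(sample)["speech"])  # Okay, setting the kitchen lights to blue.
```

Keeping the reply logic in a plain `build_reply` function means it can be tested without starting a server; the HTTP handler is only a thin wrapper around it.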

So what are you waiting for? Imitate some intelligence and say, “Hey machines, let’s talk!” ;)

Here at Clairvoyant, we’ve had a lot of fun learning about these new conversational interactions, and we are looking forward to building some cool intelligence. We hope this post sparks your interest in something newly intelligent and gets you up and running creating your own cool new services. If you have any questions, feel free to leave a comment below. We believe in improving lives through design and technology, so we would also love to hear from you if you have something interesting to share.

We wish you a happy time imitating your intelligence in your first Google Action and getting to know API.AI.

References:

- https://docs.api.ai/docs/videos