A guide to the installation and upgrade process for Confluent Schema Registry for Apache Kafka

Architecture

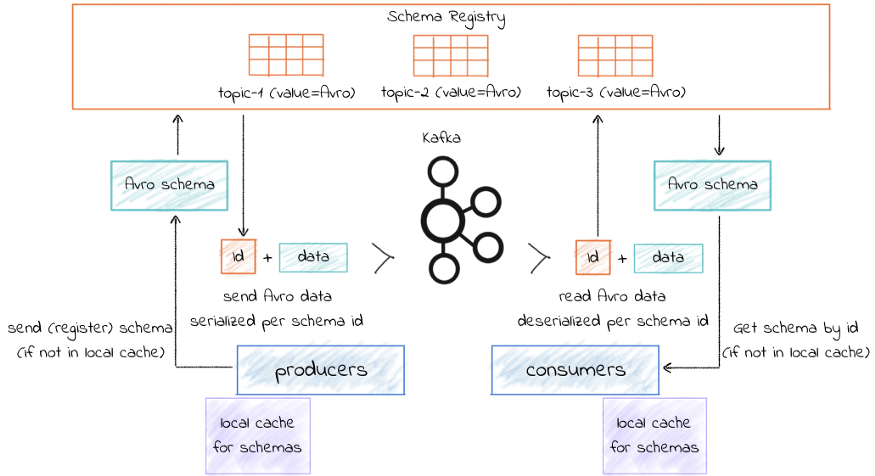

The Confluent Schema Registry provides a RESTful interface for storing and retrieving Apache Avro® schemas. It stores a versioned history of all schemas based on a specified subject name strategy, provides multiple compatibility settings, allows schemas to evolve according to the configured compatibility settings, and offers expanded Avro support. It also provides serializers that plug into Apache Kafka® clients and handle schema storage and retrieval for Kafka messages sent in the Avro format.

The Schema Registry runs as a separate process from the Kafka brokers. Your producers and consumers still talk to Kafka to publish data (messages) to topics and read it back. In parallel, they talk to the Schema Registry to retrieve the schemas that describe the data model of those messages.¹

Confluent Schema Registry for storing and retrieving Avro schemas

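A consumer can only fetch the right schema if it knows which one the producer used. Per Confluent's documented wire format, the serializers do not embed the full schema in each message: every message starts with a magic byte (0), then the 4-byte big-endian ID that Schema Registry assigned to the schema, then the Avro-encoded payload. The minimal sketch below shows only that framing with the Python standard library. The Avro encoding is treated as opaque bytes, and `frame`/`unframe` are hypothetical helper names, not part of any Confluent client:

```python
import struct
from typing import Tuple

MAGIC_BYTE = 0  # first byte of every Confluent-framed message
HEADER = struct.Struct(">bI")  # 1-byte magic + 4-byte big-endian schema ID, no padding


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix already Avro-encoded bytes with the magic byte and schema ID."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> Tuple[int, bytes]:
    """Split a framed message into (schema_id, avro_payload)."""
    if len(message) < HEADER.size:
        raise ValueError("message too short to contain a schema ID header")
    magic, schema_id = HEADER.unpack(message[: HEADER.size])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unknown magic byte: {magic}")
    return schema_id, message[HEADER.size :]
```

On the consumer side, the ID returned by `unframe` is what a deserializer would look up (and typically cache) in Schema Registry before decoding the payload.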

Compatibility

Use the table below to identify which version of Confluent Schema Registry to install for the version of Apache Kafka you have installed:

For a more complete list of system requirements and compatibility information (including supported OS and Java versions), see:

Configuration Options

The following are the most important configuration options to consider when setting up the Kafka Schema Registry.

The details below are taken from the official documentation.²

kafkastore.connection.url

ZooKeeper URL for the Apache Kafka® cluster

- Type: string
- Default: "" (empty)

kafkastore.bootstrap.servers

A list of Kafka brokers to connect to. For example, PLAINTEXT://hostname:9092,SSL://hostname2:9092

The effect of this setting depends on whether you specify kafkastore.connection.url.

If kafkastore.connection.url is not specified, then the Kafka cluster containing these bootstrap servers will be used both to coordinate Schema Registry instances (primary election) and store schema data.

If kafkastore.connection.url is specified, then this setting is used to control how Schema Registry connects to Kafka to store schema data and is particularly important when Kafka security is enabled. When this configuration is not specified, Schema Registry’s internal Kafka clients will get their Kafka bootstrap server list from ZooKeeper (configured with kafkastore.connection.url). In that case, all available listeners matching the kafkastore.security.protocol setting will be used.

By specifying this configuration, you can control which endpoints are used to connect to Kafka. Kafka may expose multiple endpoints that will be stored in ZooKeeper, but Schema Registry may need to be configured with just one of those endpoints, for example, to control which security protocol it uses.

- Type: list
- Default: []
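The example value above mixes two listener protocols. The sketch below parses such a list and keeps only the endpoints whose protocol matches a given kafkastore.security.protocol value, mirroring the filtering described above for listeners discovered through ZooKeeper. It is an illustration with hypothetical helper names, not Schema Registry's own code, and it assumes every entry carries an explicit PROTOCOL:// prefix:

```python
from typing import Iterable, List, NamedTuple


class Endpoint(NamedTuple):
    protocol: str
    host: str
    port: int


def parse_bootstrap_servers(value: str) -> List[Endpoint]:
    """Parse 'PROTOCOL://host:port,...' into Endpoint tuples."""
    endpoints = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        protocol, sep, address = entry.partition("://")
        if not sep:
            raise ValueError(f"expected PROTOCOL://host:port, got {entry!r}")
        host, _, port = address.rpartition(":")
        endpoints.append(Endpoint(protocol.upper(), host, int(port)))
    return endpoints


def endpoints_for_protocol(endpoints: Iterable[Endpoint], security_protocol: str) -> List[Endpoint]:
    """Keep only the endpoints whose protocol matches the security protocol setting."""
    wanted = security_protocol.upper()
    return [e for e in endpoints if e.protocol == wanted]
```

For example, `endpoints_for_protocol(parse_bootstrap_servers("PLAINTEXT://hostname:9092,SSL://hostname2:9092"), "SSL")` keeps only the hostname2 endpoint.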

listeners

Comma-separated list of listeners that listen for API requests over either HTTP or HTTPS. If a listener uses HTTPS, the appropriate SSL configuration parameters need to be set as well.

- Type: list
- Default: "http://0.0.0.0:8081"
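As a pre-flight check before starting the service, the sketch below flags HTTPS listeners when no keystore is configured. It assumes the keystore property is named ssl.keystore.location (check the Schema Registry configuration reference for the full set of SSL properties your version needs), and the function name is hypothetical:

```python
from typing import Dict, List
from urllib.parse import urlsplit


def https_listeners_missing_ssl(props: Dict[str, str]) -> List[str]:
    """Return the HTTPS listeners if no keystore is configured, else an empty list."""
    # Fall back to the documented default when `listeners` is not set.
    listeners = props.get("listeners", "http://0.0.0.0:8081")
    https = [
        url.strip()
        for url in listeners.split(",")
        if urlsplit(url.strip()).scheme == "https"  # urlsplit lowercases the scheme
    ]
    if https and not props.get("ssl.keystore.location"):
        return https
    return []
```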

avro.compatibility.level

The Avro compatibility type. Valid options:

- none: New schema can be any valid Avro schema
- backward (default): New schema can read data produced by the latest registered schema
- backward_transitive: New schema can read data produced by all previously registered schemas
- forward: Latest registered schema can read data produced by the new schema
- forward_transitive: All previously registered schemas can read data produced by the new schema
- full: New schema is backward and forward compatible with the latest registered schema
- full_transitive: New schema is backward and forward compatible with all previously registered schemas

- Type: string
- Default: "backward"
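The seven levels differ along two axes: which direction must be readable (new reads old, old reads new, or both) and whether the new schema is checked against only the latest version or every registered version. The sketch below encodes exactly that. Its `can_read` check is a deliberate simplification of Avro's schema resolution rules (flat records only, no type promotion, aliases, or nested types), so treat it as an illustration rather than a substitute for the registry's own checker; both function names are hypothetical:

```python
from typing import Dict, List

Schema = Dict  # an Avro record schema parsed from JSON


def can_read(reader: Schema, writer: Schema) -> bool:
    """Simplified resolution for flat records: each reader field must exist in the
    writer with an identical type, or declare a default. Extra writer fields are ignored."""
    writer_types = {f["name"]: f["type"] for f in writer["fields"]}
    for field in reader["fields"]:
        if field["name"] in writer_types:
            if writer_types[field["name"]] != field["type"]:
                return False
        elif "default" not in field:
            return False
    return True


def is_compatible(level: str, new: Schema, history: List[Schema]) -> bool:
    """Check `new` against registered versions (`history`, oldest first) for one level."""
    level = level.lower()
    if level == "none":
        return True
    transitive = level.endswith("_transitive")
    base = level[: -len("_transitive")] if transitive else level
    if base not in ("backward", "forward", "full"):
        raise ValueError(f"unknown compatibility level: {level}")
    # Transitive levels compare against every version; the others only the latest.
    targets = history if transitive else history[-1:]
    for old in targets:
        if base in ("backward", "full") and not can_read(new, old):
            return False  # new schema cannot read data written with `old`
        if base in ("forward", "full") and not can_read(old, new):
            return False  # `old` cannot read data written with the new schema
    return True
```

Against a running registry, the authoritative answer comes from the REST endpoint POST /compatibility/subjects/{subject}/versions/latest, which returns a JSON object with an is_compatible flag.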

kafkastore.topic

The durable, single-partition topic that acts as the log for schema data. This topic must be compacted so that data is not lost to the retention policy.

- Type: string
- Default: "_schemas"
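Why compaction matters: Schema Registry rebuilds its in-memory store by reading this topic from the beginning on startup, so every schema record must survive. The toy model below contrasts the two cleanup policies. Compaction keeps at least the latest record per key, while time-based retention drops the oldest records regardless of key. The keys are hypothetical placeholders, not the registry's real key format:

```python
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (key, value); keys here are illustrative only


def compacted(log: List[Record]) -> Dict[str, str]:
    """Log compaction: the latest record for every key survives."""
    latest: Dict[str, str] = {}
    for key, value in log:
        latest[key] = value  # later records overwrite earlier ones with the same key
    return latest


def after_time_retention(log: List[Record], expired: int) -> Dict[str, str]:
    """Time-based deletion: the oldest `expired` records are dropped, whatever their key."""
    return compacted(log[expired:])
```

Because each registered schema version is stored under its own key, compaction keeps all of them, whereas time-based retention would eventually delete the earliest versions.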

kafkastore.topic.replication.factor

The desired replication factor of the schema topic. The actual replication factor will be the smaller of this value and the number of live Kafka brokers.

- Type: int
- Default: 3
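The replication rule above is simple arithmetic, but it has a practical consequence: on a single-broker development cluster the default of 3 becomes 1, so the schemas topic is unreplicated. A sketch of the rule (hypothetical function name):

```python
def effective_replication_factor(desired: int, live_brokers: int) -> int:
    """Replication factor the schemas topic ends up with, per the rule described above."""
    if desired < 1 or live_brokers < 1:
        raise ValueError("desired and live_brokers must both be at least 1")
    return min(desired, live_brokers)
```

For example, `effective_replication_factor(3, 1)` is 1, while `effective_replication_factor(3, 5)` is 3.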

Install the Schema Registry

1. Decide on the version of the Schema Registry

Use the Compatibility section above to identify which version of the Schema Registry to install. In the steps below, replace the {CONFLUENT_VERSION} placeholder with your desired version, for example `5.1`.

2. Import RPM Keys

$ rpm --import https://packages.confluent.io/rpm/{CONFLUENT_VERSION}/archive.key

Note: Replace {CONFLUENT_VERSION} with your desired version of Schema Registry

3. Create the Confluent Yum Repository

- Create the /etc/yum.repos.d/confluent.repo file and open it in a text editor.
- Paste in the following content, replacing the {CONFLUENT_VERSION} placeholder with your desired version of Schema Registry:
- Save and quit.
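As an assumption modeled on the [Confluent] section in Confluent's yum installation instructions, here is a sketch that renders a minimal repository definition for a given version. The gpgkey URL reuses the key location imported in step 2, and `confluent_repo` is a hypothetical helper. Verify the contents against Confluent's documentation for your version before use:

```python
import configparser
import io


def confluent_repo(version: str) -> str:
    """Render a minimal yum .repo file for the given Confluent version (assumed layout)."""
    base = f"https://packages.confluent.io/rpm/{version}"
    parser = configparser.ConfigParser()
    parser["Confluent"] = {
        "name": "Confluent repository",
        "baseurl": base,
        "gpgcheck": "1",
        "gpgkey": f"{base}/archive.key",
        "enabled": "1",
    }
    buf = io.StringIO()
    parser.write(buf, space_around_delimiters=False)  # emit key=value, as in typical .repo files
    return buf.getvalue()
```

Writing the result of `confluent_repo("5.1")` to /etc/yum.repos.d/confluent.repo requires root privileges.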

4. Install the Schema Registry

$ yum install confluent-schema-registry -y

5. Configure the Schema Registry

- Open the /etc/schema-registry/schema-registry.properties file in an editor and set either the ZooKeeper connection (kafkastore.connection.url) or the Kafka broker list (kafkastore.bootstrap.servers).
- Update any other settings you need (see the Configuration Options section above for details on each property).
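If you prefer to script this step (for example, across several hosts), the sketch below sets one key in the properties text, replacing the first active entry or appending a new one. `set_property` is a hypothetical helper; it ignores commented-out lines and does not handle multi-line values or the escaping rules of the full Java properties format:

```python
import re


def set_property(text: str, key: str, value: str) -> str:
    """Return `text` with `key=value` set: the first active (uncommented) entry for
    `key` is replaced, otherwise the entry is appended at the end."""
    pattern = re.compile(rf"^[ \t]*{re.escape(key)}[ \t]*[=:].*$", re.MULTILINE)
    line = f"{key}={value}"
    if pattern.search(text):
        # A function replacement avoids treating backslashes in `value` as escapes.
        return pattern.sub(lambda _match: line, text, count=1)
    separator = "" if not text or text.endswith("\n") else "\n"
    return f"{text}{separator}{line}\n"
```

To apply it, read /etc/schema-registry/schema-registry.properties, pass its contents through `set_property`, and write the result back with root privileges.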

6. Start Schema Registry

Managing the Schema Registry

- Start Schema Registry:

$ systemctl start confluent-schema-registry

- Stop Schema Registry:

$ systemctl stop confluent-schema-registry

- Restart Schema Registry:

$ systemctl restart confluent-schema-registry

- Get the status of Schema Registry:

$ systemctl status confluent-schema-registry
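For automation, such as a deployment script, the same commands can be driven from Python. A sketch, assuming the unit name used above and a host running systemd; the function names are hypothetical:

```python
import subprocess
from typing import List

SERVICE = "confluent-schema-registry"
ACTIONS = {"start", "stop", "restart", "status"}


def systemctl_cmd(action: str, service: str = SERVICE) -> List[str]:
    """Build the systemctl command line for one of the actions listed above."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return ["systemctl", action, service]


def is_active(service: str = SERVICE) -> bool:
    """True if systemd reports the unit as active (`systemctl is-active --quiet` exits 0)."""
    return subprocess.run(["systemctl", "is-active", "--quiet", service]).returncode == 0
```

For example, `subprocess.run(systemctl_cmd("restart"), check=True)` restarts the service and raises if systemctl fails.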

Upgrading the Schema Registry

If you upgrade Kafka, you will also need to upgrade the Schema Registry to a compatible version. Follow these steps:

Note: Make sure Kafka has been upgraded before you begin.

1. Decide on the version of the Schema Registry

Use the Compatibility section above to identify which version of the Schema Registry to install. In the steps below, replace the {CONFLUENT_VERSION} placeholder with your desired version, for example `5.1`.

2. Stop the Schema Registry

$ systemctl stop confluent-schema-registry

3. Back Up the Configuration Files

Back up all properties files under /etc/schema-registry/:

$ cp -r /etc/schema-registry /tmp/schema-registry-backup

4. Uninstall the Schema Registry

$ yum remove confluent-schema-registry

5. Update Confluent Yum Repository

- Open the /etc/yum.repos.d/confluent.repo file in a text editor.
- Change the version (the {CONFLUENT_VERSION} value set during installation) to your desired version of the Schema Registry.
- Save and quit.

6. Install New Version of Schema Registry

$ yum install confluent-schema-registry -y

7. Restore Configurations

Restore the backed-up configuration files into the /etc/schema-registry/ directory:

$ mv /etc/schema-registry /etc/schema-registry-backup
$ cp -r /tmp/schema-registry-backup /etc/schema-registry

8. Start the Schema Registry

$ systemctl start confluent-schema-registry
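The upgrade sequence above can be captured as an ordered dry-run plan, which makes it easy to review before running anything. A sketch with a hypothetical function name; the repository edit in step 5 is manual and appears only as a reminder comment:

```python
from typing import List


def upgrade_plan(backup_dir: str = "/tmp/schema-registry-backup") -> List[str]:
    """Return the upgrade steps as an ordered list of shell commands (nothing is executed)."""
    return [
        "systemctl stop confluent-schema-registry",
        f"cp -r /etc/schema-registry {backup_dir}",
        "yum remove confluent-schema-registry",
        "# edit /etc/yum.repos.d/confluent.repo and set the new version",
        "yum install confluent-schema-registry -y",
        "mv /etc/schema-registry /etc/schema-registry-backup",
        f"cp -r {backup_dir} /etc/schema-registry",
        "systemctl start confluent-schema-registry",
    ]
```

Printing each entry of `upgrade_plan()` gives a checklist that matches the numbered steps.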

Validating the Schema Registry

Once the Schema Registry is installed and configured, we can validate that it is running as expected with the steps below:

Note: Scripts for creating and managing sample schemas are available on the page below. Typically, schemas are created programmatically.

https://docs.confluent.io/home/overview.html

1. Define a Schema

A schema is defined in JSON. Save it to a file named order.json:

order.json
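As a hypothetical example of such a file (the namespace and field names are invented for illustration), the following writes a small Avro record schema to order.json. The optional `notes` field uses a union with `null` plus a `null` default, the usual Avro idiom for an optional field:

```python
import json

# Hypothetical Avro record schema for an order; field names are illustrative only.
ORDER_SCHEMA = {
    "type": "record",
    "name": "Order",
    "namespace": "com.example.orders",
    "fields": [
        {"name": "order_id", "type": "string"},
        {"name": "customer_id", "type": "string"},
        {"name": "amount", "type": "double"},
        {"name": "notes", "type": ["null", "string"], "default": None},  # None -> JSON null
    ],
}


def write_schema(path: str = "order.json") -> None:
    """Write the schema as pretty-printed JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(ORDER_SCHEMA, f, indent=2)
```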

2. Script to Register Schema³

Run a cURL command to register the schema.

Here is a Python script that converts the schema JSON into a single line and uses cURL to register it:

register_schema.py
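A minimal sketch of such a script, assuming the three command-line arguments used in the command below (registry URL, topic, schema file) and using Python's standard urllib in place of cURL. It posts to the Schema Registry REST endpoint POST /subjects/{subject}/versions and, consistent with the subject queried under Listing Schemas, registers the schema under {topic}-value:

```python
import json
import sys
import urllib.request

CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"


def build_request(registry_url: str, topic: str, schema_text: str) -> urllib.request.Request:
    """Build the POST that registers `schema_text` under the '<topic>-value' subject."""
    subject = f"{topic}-value"
    # Round-tripping through json collapses a pretty-printed schema onto one line.
    schema_one_line = json.dumps(json.loads(schema_text))
    body = json.dumps({"schema": schema_one_line}).encode("utf-8")
    return urllib.request.Request(
        f"{registry_url.rstrip('/')}/subjects/{subject}/versions",
        data=body,
        headers={"Content-Type": CONTENT_TYPE},
        method="POST",
    )


def register(registry_url: str, topic: str, schema_path: str) -> int:
    """Register the schema file and return the ID assigned by Schema Registry."""
    with open(schema_path, encoding="utf-8") as f:
        request = build_request(registry_url, topic, f.read())
    with urllib.request.urlopen(request) as response:
        return json.load(response)["id"]


if __name__ == "__main__":
    if len(sys.argv) == 4:
        print(register(*sys.argv[1:4]))
    else:
        print("usage: python register_schema.py REGISTRY_URL TOPIC SCHEMA_FILE", file=sys.stderr)
```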

You can run the above script with the following command:

$ python register_schema.py http://{kafka-schema-registry-host}:8081 order-avro order.json

Note: Replace the {kafka-schema-registry-host} placeholder

Listing Schemas

We can confirm that the schema was registered by calling the following cURL commands:

$ curl -X GET http://{kafka-schema-registry-host}:8081/subjects
$ curl -X GET http://{kafka-schema-registry-host}:8081/schemas/ids/1
$ curl -X GET http://{kafka-schema-registry-host}:8081/subjects/order-avro-value/versions/1
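The same checks can be run from Python. A sketch using only the standard library; the helper names are hypothetical, and the endpoints are the ones queried with cURL above:

```python
import json
import urllib.request
from typing import Any, List


def listing_urls(registry_url: str, subject: str, schema_id: int = 1, version: int = 1) -> List[str]:
    """URLs for: all subjects, one schema by ID, and one version of a subject."""
    base = registry_url.rstrip("/")
    return [
        f"{base}/subjects",
        f"{base}/schemas/ids/{schema_id}",
        f"{base}/subjects/{subject}/versions/{version}",
    ]


def fetch_json(url: str) -> Any:
    """GET a URL and decode the JSON response body."""
    with urllib.request.urlopen(url) as response:
        return json.load(response)
```

GET /subjects returns a JSON array of subject names, so after a successful registration it should contain order-avro-value.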

[1] Confluent Schema Registry: https://docs.confluent.io/current/schema-registry/index.html

[2] Confluent Schema Registry Configurations: https://docs.confluent.io/current/schema-registry/installation/config.html

[3] Validation Reference: https://gist.github.com/aseigneurin/5730c07b4136a84acb5aeec42310312c

To get the best data engineering solutions for your business, reach out to us at Clairvoyant.