What is Dremio?

Dremio is a Data-as-a-Service Platform.

- It is built on Apache Arrow, Apache Parquet, and Apache Calcite.
- It reads data from any source into Arrow buffers for in-memory processing.
- It has its own SQL engine, Sabot, which executes queries and augments the abilities of the underlying data source. For example, Elasticsearch does not support JOIN, so Dremio pushes down what it can and processes the remaining query fragments in Sabot.
- Data Reflections persist as Parquet files on a file system: HDFS, S3, ADLS, or NAS.
- It has a highly optimized, vectorized Parquet reader, and Parquet is its preferred format at this time.
- It is a distributed process. You can run it in containers, on bare metal, or as a YARN-native app in your Hadoop cluster, and scale up or down as needed.
- It connects to each source in a way that suits that source: RDBMS via JDBC; MongoDB via the Java driver; Elasticsearch via its driver; files such as Parquet and JSON using its own readers; Hive by picking up the schema from the Hive Metastore and using its own file readers; and so on.
- Its UI is intended for data curation, searching the catalog, administration, and similar tasks. Users are expected to issue queries from their favorite tools over ODBC, JDBC, or REST.

Cluster Deployment

Overview

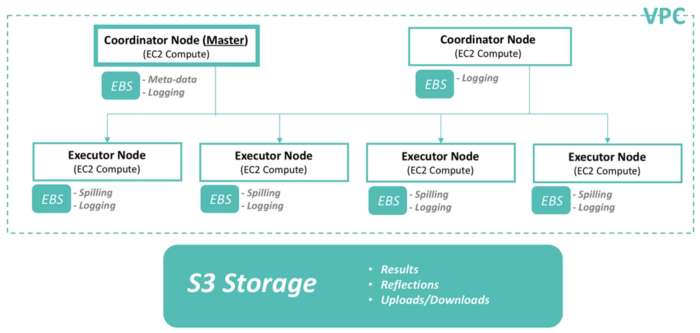

Cluster overview, © Dremio (https://www.dremio.com)

System Requirements

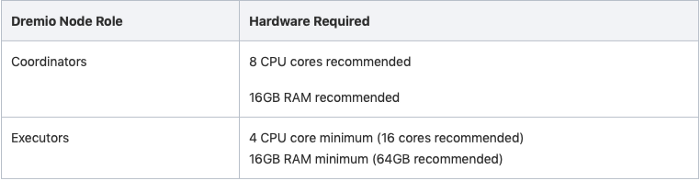

Hardware Required

Linux Distributions

Dremio supports the following distributions and versions of Linux:

- RHEL and CentOS 6.7+ and 7.3+
- SLES 12 SP2+
- Ubuntu 14.04+
- Debian 7+

Network

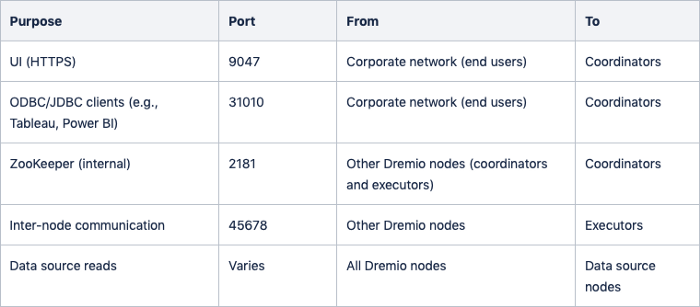

The following ports must be open, either to access the Dremio UI or to allow communication between nodes:

Ports to be opened
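
Whether the required ports are reachable can be checked from another node before starting the cluster. The following is a minimal sketch and not part of Dremio: `port_open` and `check_ports` are hypothetical helper names, and the sketch assumes that bash (for its /dev/tcp pseudo-device) and the GNU coreutils `timeout` command are installed.

```shell
# Return success if a TCP connection to host:port can be opened.
# The connection attempt runs in a child bash process so the socket is
# closed as soon as that process exits; `timeout` caps the wait at 3 seconds.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Print one "host:port open|closed" line for every port given.
check_ports() {
  local host="$1"; shift
  local port
  for port in "$@"; do
    if port_open "$host" "$port"; then
      echo "${host}:${port} open"
    else
      echo "${host}:${port} closed"
    fi
  done
}
```

For example, `check_ports <master_node_ip> 9047 2181` would test the UI port and the ZooKeeper port used later in this guide; the remaining port numbers should come from the table above. A "closed" result can also mean the service is simply not running yet, so the check is most useful once Dremio has been started.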

Server System

Dremio can be deployed on Linux, Windows, and Mac. For production deployments, Linux is the recommended operating system.

We chose RHEL 7.5 because an RPM package is available for it. A tarball package is also available for other Linux distributions, but setting up from a tarball takes more effort.

Java Development Kit

Java SE 8 is required to run Dremio. Note that as of Dremio v3, later versions of Java are not supported.

Check whether Java is installed on the server and, if so, which version:

$ java -version

If Java is not present or an earlier version is installed, please follow the instructions below to install Java SE 8.
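
The version check can also be scripted, which helps when several nodes have to be verified. This is a rough sketch under stated assumptions: the function names are hypothetical, and it assumes `java -version` prints a line containing the quoted version string (for example `"1.8.0_191"`), as Oracle and OpenJDK builds normally do.

```shell
# Extract the major Java version from a version string.
# Legacy strings look like "1.8.0_191" (major 8); newer ones like "11.0.2".
java_major() {
  local v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # legacy "1.x" scheme: drop the leading "1."
  esac
  echo "${v%%[._-]*}"     # keep everything before the first '.', '_' or '-'
}

# Print the version string reported by the installed JVM.
# `java -version` writes to stderr, hence the 2>&1.
installed_java_version() {
  java -version 2>&1 | awk -F '"' '/version/ {print $2; exit}'
}

# Succeed only when the installed JVM is Java 8.
is_java8() {
  [ "$(java_major "$(installed_java_version)")" = "8" ]
}
```

A call such as `is_java8 || echo "Java SE 8 is required"` could then guard the rest of an installation script.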

To install Java SE 8 on RHEL, follow these steps:

- Download the JDK from the Oracle website:

$ wget --no-cookies --no-check-certificate --header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u191-b12/2787e4a523244c269598db4e85c51e0c/jdk-8u191-linux-x64.rpm

- Install the JDK:

$ sudo yum localinstall jdk-8u191-linux-x64.rpm

Installation and Configuration

Installation

- Download the RPM distribution:

$ wget https://download.dremio.com/community-server/3.0.0-201810262305460004-5c90d75/dremio-community-3.0.0-201810262305460004_5c90d75_1.noarch.rpm

- Install it from the RPM:

$ sudo yum install dremio-<VERSION>.rpm

- Enable Dremio to start at machine startup:

$ sudo chkconfig --level 3456 dremio on

- Start or stop Dremio:

# Starting
$ sudo service dremio start
# Stopping
$ sudo service dremio stop

Configuration

Master node

#
# Copyright (C) 2017-2018 Dremio Corporation
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

paths: {
  # the local path for dremio to store data.
  local: "/var/lib/dremio"

  # the distributed path Dremio data including job results, downloads, uploads, etc
  #dist: "pdfs://"${paths.local}"/pdfs"
  #dist: "s3a://fe-dremio-storage/dremio-storage"
}

services: {
  coordinator.enabled: true,
  coordinator.master.enabled: true,
  executor.enabled: false
}

zookeeper: "<master_node_ip>:2181"

Executor nodes

#
# Copyright (C) 2017-2018 Dremio Corporation
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

paths: {
  # the local path for dremio to store data.
  local: "/var/lib/dremio"

  # the distributed path Dremio data including job results, downloads, uploads, etc
  #dist: "pdfs://"${paths.local}"/pdfs"
  #dist: "s3a://fe-dremio-storage/dremio-storage"
}

services: {
  coordinator.enabled: false,
  coordinator.master.enabled: false,
  executor.enabled: true
}

zookeeper: "<master_node_ip>:2181"

This configuration assumes that the embedded ZooKeeper is running on the master node.
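
The two files differ only in the services block, so when many nodes are provisioned that block can be generated from the node's role. The sketch below is only an illustration: `services_block` is a hypothetical helper, and the two role names simply mirror the master and executor configurations shown above.

```shell
# Print the services block of dremio.conf for a given node role.
# Supported roles: "master" (coordinator + master) and "executor".
services_block() {
  local role="$1" coordinator master executor
  case "$role" in
    master)   coordinator=true;  master=true;  executor=false ;;
    executor) coordinator=false; master=false; executor=true ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  cat <<EOF
services: {
  coordinator.enabled: ${coordinator},
  coordinator.master.enabled: ${master},
  executor.enabled: ${executor}
}
EOF
}
```

Running `services_block executor` prints the block used on executor nodes; the paths and zookeeper settings stay the same on every node.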

Verifying the installation

Open a browser and go to <master_node_ip>:9047. On first access, Dremio asks you to create an admin user.

Once logged in, click the Admin button at the top right of the page. Node Activity is shown under the Cluster section.

Each node’s hostname or IP address should be listed, along with a green status light.
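
On a server without a browser, a first reachability check of the UI can be done with curl. This is a hedged sketch: `ui_url` and `ui_http_status` are hypothetical helpers, curl is assumed to be installed, and the check only shows that something answers HTTP on port 9047, not that every node has joined the cluster.

```shell
# Build the UI URL for a coordinator host; the port defaults to 9047.
ui_url() {
  echo "http://$1:${2:-9047}"
}

# Print the HTTP status code returned by the UI.
# curl prints 000 when no connection could be made within 5 seconds.
ui_http_status() {
  curl --silent --output /dev/null --max-time 5 \
       --write-out '%{http_code}' "$(ui_url "$@")"
}
```

For example, `ui_http_status <master_node_ip>` printing `000` would suggest that the service is not running or that the port is blocked.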

Distributed Storage

By default, Dremio uses the disk space on the local Dremio nodes (usually /var/lib/dremio) to store accelerator data, CREATE TABLE AS tables, job results, downloads, and uploads. You can specify a different store such as NAS, HDFS, MapR-FS, S3, or ADLS.

Amazon S3

Before configuring Amazon S3 as Dremio’s distributed storage, test adding the same bucket as a Dremio source and verify the connection.

- Set the distributed path in dremio.conf:

paths: {
  ...
  dist: "s3a://<BUCKET_NAME>/<STORAGE_ROOT_DIRECTORY>"
}

- Create core-site.xml and include IAM credentials with list, read, and write permissions:

<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.s3a.access.key</name>
    <description>AWS access key ID.</description>
    <value>ACCESS KEY</value>
  </property>
  <property>
    <name>fs.s3a.secret.key</name>
    <description>AWS secret key.</description>
    <value>SECRET KEY</value>
  </property>
</configuration>

Copy core-site.xml to Dremio's configuration directory (the same directory as dremio.conf) on all nodes.

Once done, please restart the cluster.
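
Copying the file to every node can be scripted. The following is a small sketch, not an official procedure: `distribute_core_site` is a hypothetical helper, it assumes SSH access to each node with write permission on the target directory, and the configuration directory is passed in explicitly because its location depends on how Dremio was installed (/etc/dremio in the usage example is an assumption).

```shell
# Copy core-site.xml from the current directory to the given configuration
# directory on each node. With DRY_RUN set to a non-empty value, the scp
# commands are printed instead of executed.
distribute_core_site() {
  local conf_dir="$1"; shift
  local node
  for node in "$@"; do
    ${DRY_RUN:+echo} scp core-site.xml "${node}:${conf_dir}/"
  done
}
```

A dry run such as `DRY_RUN=1 distribute_core_site /etc/dremio node1 node2` lists the copies that would be made. Since the file contains the AWS secret key, it is worth restricting its permissions on every node.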