What is Compression, and why do we need Compression?

Nowadays, Convolutional Neural Networks (CNNs) are used extensively for image classification, facial and object recognition, medical image analysis, and more. Some applications, such as self-driving cars, also require real-time predictions with low latency. Current neural network architectures have millions of parameters, which makes such heavy models hard to deploy on IoT devices and other edge devices with limited memory. Compression techniques help solve these problems by reducing the number of parameters (or the number of bits used to store each one), which shrinks the CNN model and reduces its complexity. With fewer parameters, inference generally takes less time than with the original CNN model, and depending on the technique, training or fine-tuning the compressed model may also be faster.

Clairvoyant AI, Inc is at the forefront of harnessing the power of data to reap actionable insights for our clients through AI-, ML-, and related data solutions. In this blog, we will be looking at different compression techniques for Convolutional Neural Networks that will help simplify the Convolutional Neural Networks architecture while delivering a similar performance so that it is possible to deploy these CNNs on hardware-constrained devices like IoT devices.

Types of Compression

Two widely used types of compression are:

Pruning

Pruning is the process of removing the least important weights or filters from the layers of a CNN model. Pruning induces sparsity: a chosen percentage of weights or filters is set to zero. There are two types of pruning: structured pruning and unstructured pruning. Unstructured pruning removes individual weights, whereas structured pruning removes entire structures such as kernel filters or channels. In PyTorch, the function prune.ln_structured() from torch.nn.utils.prune performs structured pruning by ranking filters (or channels) by their Ln norm, typically the L1 or L2 norm, and setting the lowest-ranked ones to zero. The intent is to force the remaining weights to learn more generalized features rather than class-specific ones, while also reducing the number of effective parameters. The resulting sparse network can achieve lower latency, provided that the runtime or hardware is able to exploit the sparsity.

Note that PyTorch's pruning utilities zero out weights rather than physically removing them. Until prune.remove() is called, each pruned layer stores both the original weights (weight_orig) and a binary mask (weight_mask), which is why the saved parameter file can be roughly twice the size of the original, even though the number of nonzero parameters has been reduced.
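The difference between unstructured and structured pruning can be illustrated with a minimal sketch using torch.nn.utils.prune on two standalone Conv2d layers. The layer sizes, the 30% amount, and n=2 (L2 norm) are illustrative assumptions, not settings taken from the original code.

```python
import torch
import torch.nn as nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)

# Unstructured pruning: zero the 30% of individual weights with the smallest |w|.
conv_u = nn.Conv2d(in_channels=3, out_channels=8, kernel_size=3)
prune.l1_unstructured(conv_u, name="weight", amount=0.3)

# Structured pruning: zero 30% of whole filters (dim=0 = output channels),
# ranked by their L2 norm (n=2).
conv_s = nn.Conv2d(in_channels=3, out_channels=10, kernel_size=3)
prune.ln_structured(conv_s, name="weight", amount=0.3, n=2, dim=0)

# Fraction of individual weights that are zero in the unstructured case.
unstructured_sparsity = (conv_u.weight == 0).float().mean().item()

# Number of filters that are entirely zero in the structured case.
zero_filters = int((conv_s.weight.abs().sum(dim=(1, 2, 3)) == 0).sum())

# Until prune.remove() is called, the layer keeps the original weights
# and the mask, which is why a saved state_dict grows.
keys_before = sorted(conv_s.state_dict().keys())
prune.remove(conv_s, "weight")  # bake the mask into .weight permanently
keys_after = sorted(conv_s.state_dict().keys())

print(f"unstructured sparsity: {unstructured_sparsity:.2f}")
print(f"zero filters: {zero_filters} of {conv_s.out_channels}")
print(keys_before, keys_after)
```

The unstructured layer ends up with scattered zeros, while the structured layer loses three complete filters, which is the kind of sparsity that hardware can more easily turn into real savings.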

Quantization

Generally, all the weights of a Convolutional Neural Network model are stored as float32, that is, 32-bit floating-point numbers. Quantization is a technique that converts these 32-bit values to lower-precision representations such as 16-bit or 8-bit numbers. According to the survey by Cheng et al. [1], there has also been research on quantizing weights to just 1 bit (binarization), but this can lead to a significant drop in performance.
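As a rough back-of-the-envelope sketch, weight storage scales linearly with the number of bits per parameter. The helper model_size_bytes and the 2.5-million-parameter count are hypothetical, and real files add some serialization overhead on top of these figures.

```python
def model_size_bytes(num_params: int, bits_per_param: int) -> float:
    """Storage needed for the weights alone (ignores file-format overhead)."""
    return num_params * bits_per_param / 8


# Hypothetical model with 2.5 million parameters.
NUM_PARAMS = 2_500_000
for bits, label in [(32, "float32"), (16, "float16"), (8, "int8"), (1, "binary")]:
    megabytes = model_size_bytes(NUM_PARAMS, bits) / 1e6
    print(f"{label:>8}: {megabytes:6.2f} MB")
```

Going from float32 to 8-bit integers divides weight storage by four, which is the same ratio as the file-size reduction reported later for the quantized model.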

In this compression technique, we rescale the model's weights from float32 to 8-bit integers using a scale factor and a zero point, which drastically reduces the model's file size. With an unsigned 8-bit type (uint8), the quantized values lie in the range [0, 255]; with a signed 8-bit type (int8), they lie in [-128, 127]. There are two types of quantization techniques: post-training quantization and quantization-aware training. Post-training quantization does not require retraining the model, whereas quantization-aware training simulates quantization during training so that the model learns to compensate for it. For post-training quantization, we only need the original trained, uncompressed CNN model (static post-training quantization additionally uses a small calibration dataset).
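The rescaling step can be sketched with the common affine (asymmetric) scheme, in which a scale and a zero point map a float range onto [0, 255]. This is a plain-Python illustration of the arithmetic under that assumption, not PyTorch's internal implementation, and the weight values are made up. For signed int8 you would pass qmin=-128 and qmax=127 instead.

```python
def quant_params(x_min: float, x_max: float, qmin: int = 0, qmax: int = 255):
    """Scale and zero point for an affine mapping of [x_min, x_max] onto [qmin, qmax]."""
    # Extend the range so that 0.0 is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = int(round(qmin - x_min / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=0, qmax=255):
    """Map floats to clamped integers: q = round(x / scale) + zero_point."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Approximate the original floats: x ~ (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-0.62, -0.1, 0.0, 0.35, 0.91]
scale, zp = quant_params(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print(scale, zp, q)
print(restored)
```

Each restored value differs from the original by at most half a quantization step (scale / 2), which is why 8-bit quantization often costs little accuracy.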

We will be exploring two types of compression techniques in this blog: Pruning and Quantization.

Dataset and CNN Architecture

The dataset that we will be using here is the Street View House Numbers (SVHN) dataset, together with a five-layer custom CNN architecture similar to AlexNet. SVHN has 10 classes, one for each digit from 0 to 9. Its images are 32×32 RGB (three-channel) images, with 73,257 training images and 26,032 test images. The dataset is available in PyTorch through torchvision.datasets.SVHN.

Compression Implementation using PyTorch

To implement compression using TensorFlow, please refer to the TensorFlow guides on pruning and post-training integer quantization listed in the references (entries 5 and 6).

Now, let's walk through how to implement these compression techniques using the PyTorch library:

NOTE: This code has been run on CPU and not GPU.

Import the Libraries and Packages

Loading the Dataset

Define the CNN Model

The CNN architecture used here is similar to the AlexNet architecture.
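The original model code is not reproduced here, so the following is a hypothetical sketch of a five-layer, AlexNet-style CNN for 32×32 SVHN images: three convolutional layers followed by two fully connected layers. The class name SVHNNet and all layer sizes are assumptions.

```python
import torch
import torch.nn as nn


class SVHNNet(nn.Module):
    """Hypothetical five-layer, AlexNet-style CNN for 32x32 RGB inputs."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, padding=1), nn.ReLU(inplace=True),
            nn.MaxPool2d(2),  # 32x32 -> 16x16
            nn.Conv2d(64, 128, kernel_size=3, padding=1), nn.ReLU(inplace=True),
            nn.MaxPool2d(2),  # 16x16 -> 8x8
            nn.Conv2d(128, 256, kernel_size=3, padding=1), nn.ReLU(inplace=True),
            nn.MaxPool2d(2),  # 8x8 -> 4x4
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Dropout(0.5),
            nn.Linear(256 * 4 * 4, 512), nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(512, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


model = SVHNNet()
num_params = sum(p.numel() for p in model.parameters())
print(f"parameters: {num_params:,}")
```

In this sketch, about 2.1 million of the roughly 2.5 million parameters sit in the first fully connected layer, which is typical of AlexNet-style designs and is one reason compressing the Linear layers pays off.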

Train and Test the Original CNN Model

The first part consists of training the model, and the second part consists of testing the trained model.
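A minimal sketch of the training part, assuming a standard cross-entropy classification setup. The function name train_one_epoch and the commented-out hyperparameters and file name are assumptions.

```python
import torch
import torch.nn as nn


def train_one_epoch(model: nn.Module, loader, optimizer: torch.optim.Optimizer,
                    device: str = "cpu") -> float:
    """Run one pass over `loader` and return the mean training loss."""
    criterion = nn.CrossEntropyLoss()
    model.train()
    total_loss, total_samples = 0.0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()
        loss = criterion(model(images), labels)
        loss.backward()
        optimizer.step()
        total_loss += loss.item() * images.size(0)
        total_samples += images.size(0)
    return total_loss / total_samples


# Usage sketch (epochs, learning rate, and file name are assumptions):
# optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
# for epoch in range(10):
#     loss = train_one_epoch(model, train_loader, optimizer)
#     print(f"epoch {epoch}: loss {loss:.4f}")
# torch.save(model.state_dict(), "original_model.pt")
```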

Test the Original Model
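For the testing part, a minimal evaluation function could compute accuracy and wall-clock prediction time over the test loader. The name evaluate and its return format are assumptions; timing with time.perf_counter is one simple choice.

```python
import time

import torch
import torch.nn as nn


@torch.no_grad()
def evaluate(model: nn.Module, loader, device: str = "cpu"):
    """Return (accuracy, elapsed_seconds) of `model` on `loader`."""
    model.eval()
    correct, total = 0, 0
    start = time.perf_counter()
    for images, labels in loader:
        outputs = model(images.to(device))
        preds = outputs.argmax(dim=1).cpu()
        correct += (preds == labels).sum().item()
        total += labels.size(0)
    elapsed = time.perf_counter() - start
    return correct / total, elapsed


# Usage sketch:
# accuracy, seconds = evaluate(model, test_loader)
# print(f"accuracy: {accuracy:.2%}, prediction time: {seconds:.1f} s")
```

Because the same function can be reused unchanged for the original, pruned, and quantized models, the accuracy and timing numbers stay directly comparable.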

Train and Test the Pruned Model

We will use prune.ln_structured() from PyTorch's torch.nn.utils.prune module to prune the original model.

The pruning step applies prune.ln_structured() to each convolutional and fully connected layer of the custom CNN model defined above, with a pruning percentage of 30%. The dim parameter selects the dimension along which channels are pruned; for example, dim=0 prunes the output channels (that is, entire filters) of a Conv2d weight. Pruning induces sparsity, which means that 30% of the channels are set to zero. The model is then retrained so that the remaining 70% of the weights learn as many generalized patterns as possible, while the pruning mask keeps the removed channels at zero.
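A minimal sketch of this pruning step, assuming the model is built from standard nn.Conv2d and nn.Linear layers. The helper name prune_model, the choice n=1 (L1 norm), and skipping the final classifier layer are assumptions, not details confirmed by the original code.

```python
import torch.nn as nn
import torch.nn.utils.prune as prune


def prune_model(model: nn.Module, amount: float = 0.3, n: int = 1, dim: int = 0,
                skip_last_linear: bool = True, make_permanent: bool = False) -> nn.Module:
    """Apply Ln-norm structured pruning to the Conv2d and Linear layers of `model`.

    amount=0.3 zeroes 30% of the slices along `dim`; dim=0 selects output channels
    (whole filters for Conv2d, output neurons for Linear). n=1 ranks slices by
    their L1 norm; n=2 would use the L2 norm instead.
    """
    layers = [m for m in model.modules() if isinstance(m, (nn.Conv2d, nn.Linear))]
    if skip_last_linear and layers and isinstance(layers[-1], nn.Linear):
        # With dim=0, pruning the classifier would zero whole class outputs.
        layers = layers[:-1]
    for layer in layers:
        prune.ln_structured(layer, name="weight", amount=amount, n=n, dim=dim)
        if make_permanent:
            # Drop weight_orig/weight_mask and keep only the zeroed weight tensor.
            prune.remove(layer, "weight")
    return model
```

Keeping make_permanent=False while retraining preserves the masks, so pruned channels stay at zero; calling prune.remove() only before saving avoids storing both weight_orig and weight_mask.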

Now, we can reuse the same training loop that was used for the original model to retrain the pruned model, and save its weights to a separate file.
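The sketch below, on a small hypothetical model with random stand-in data, checks that retraining does not revive pruned weights: the optimizer updates weight_orig, and a forward pre-hook reapplies weight_mask on every forward pass. It then calls prune.remove() before saving so that the file stores only the zeroed weights. The model, learning rate, momentum, and file name are assumptions.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)
# Small stand-in model (hypothetical); the hidden Linear layer gets pruned.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 16), nn.ReLU(), nn.Linear(16, 10))
prune.ln_structured(model[1], name="weight", amount=0.3, n=1, dim=0)  # 30% of hidden neurons
pruned_rows = model[1].weight.abs().sum(dim=1) == 0  # rows zeroed by the mask

# One fine-tuning step on random stand-in data.
optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)
x, y = torch.randn(32, 3, 8, 8), torch.randint(0, 10, (32,))
loss = nn.CrossEntropyLoss()(model(x), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()  # updates weight_orig; weight_mask is a buffer, so it is not trained

with torch.no_grad():
    model(x)  # the forward pre-hook recomputes weight = weight_orig * weight_mask
still_zero = bool((model[1].weight[pruned_rows] == 0).all())

# Make pruning permanent, then save only the (zeroed) weights.
prune.remove(model[1], "weight")
save_path = os.path.join(tempfile.gettempdir(), "pruned_model.pt")  # hypothetical file name
torch.save(model.state_dict(), save_path)
print(f"pruned rows still zero after retraining: {still_zero}")
```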

After training the pruned model, we can test it with the same code that was used to test the original model.

Evaluate the Quantization Model

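The torch.quantization.quantize_dynamic() function in PyTorch converts the original model to a quantized model. We pass in the original model and the set of layer types we want to quantize; in this case, we specified Conv2d and Linear layers. Note that PyTorch's dynamic quantization is designed for nn.Linear and recurrent layers such as nn.LSTM, so Conv2d layers are typically left in float32 by quantize_dynamic, and most of the size reduction comes from the Linear layers. The output of the quantized model is then detached and converted to a NumPy array for evaluation.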
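A minimal sketch of dynamic quantization on a small hypothetical stand-in model rather than the blog's CNN. Because PyTorch's dynamic quantization targets nn.Linear (and recurrent) layers, this sketch requests only nn.Linear, and the Conv2d layer stays in float32. The output is detached and converted to NumPy as described above.

```python
import torch
import torch.nn as nn

# Stand-in model (hypothetical) with one conv layer and two fully connected layers.
float_model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(4), nn.Flatten(),
    nn.Linear(16 * 4 * 4, 64), nn.ReLU(),
    nn.Linear(64, 10),
).eval()

# Dynamic quantization: Linear weights are stored as int8, and activations
# are quantized on the fly at inference time.
quantized_model = torch.quantization.quantize_dynamic(
    float_model, {nn.Linear}, dtype=torch.qint8
)

with torch.no_grad():
    images = torch.randn(4, 3, 32, 32)
    outputs = quantized_model(images).detach().numpy()  # detach, then convert to NumPy
predictions = outputs.argmax(axis=1)
print(quantized_model)
```

Printing the quantized model shows which layers were swapped for quantized versions, which is a quick way to confirm what quantize_dynamic actually converted.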

DataParallel Module to Support Training of Models on Multiple GPUs

The dataset considered here is relatively small. New CNN architectures are usually benchmarked on larger and more complex datasets such as ImageNet, which consists of over one million images. Training on such a dataset requires access to GPUs; otherwise, training can take many days. PyTorch provides a DataParallel module that can be used to train CNN models on multiple GPUs. It is a wrapper around the CNN model and is easy to use.

In our setup, we used 1, 6, 7, and 8 as the GPU IDs; any GPUs that are free for computation can be used instead. Since DataParallel splits each batch across the GPUs, access to multiple GPUs lets us increase the batch size, which in turn helps to reduce training time.
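A minimal sketch of wrapping a model in nn.DataParallel with the GPU IDs mentioned above. The helper wrap_data_parallel and its fallback to the unwrapped model are assumptions added so that the snippet also runs on machines without those GPUs.

```python
import torch
import torch.nn as nn


def wrap_data_parallel(model: nn.Module, device_ids=(1, 6, 7, 8)) -> nn.Module:
    """Wrap `model` in nn.DataParallel over the requested GPUs that actually exist.

    Returns the unwrapped model when fewer than two of those GPUs are available.
    """
    available = [i for i in device_ids if i < torch.cuda.device_count()]
    if len(available) < 2:
        return model
    # DataParallel expects the module's parameters on the first listed device.
    model = model.to(f"cuda:{available[0]}")
    return nn.DataParallel(model, device_ids=available)


# Usage sketch:
# model = wrap_data_parallel(model)
# outputs = model(images.to("cuda:1"))  # inputs go to the first listed GPU
```

DataParallel splits each input batch along its first dimension across the listed GPUs and gathers the outputs back on the first one. For new projects, the PyTorch documentation recommends DistributedDataParallel over DataParallel, even on a single machine.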

Evaluation Metrics

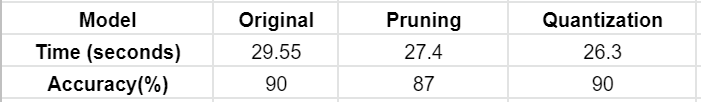

We measure the success of these compression techniques by prediction time and accuracy. The original model achieved 90% accuracy, the pruned model 87%, and the quantized model 90%. The compressed models therefore achieve accuracy similar to the original uncompressed model (a drop of less than 5 percentage points), while using less space to store their parameters and taking less time to generate predictions.

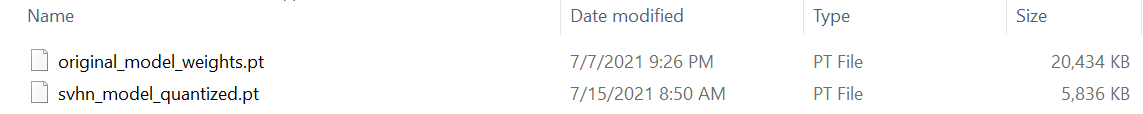

The output above shows that the original model takes about 29.5 seconds to generate predictions for the approximately 26,000 test images, whereas the pruned model takes about 27.4 seconds and the quantized model about 26.3 seconds. The accuracy of the pruned and quantized models is also close to that of the original model, so there is very little drop in performance. The weights file for the quantized model is about one quarter of the size of the original model's, as can be seen in the image below. These numbers depend on the specific hardware and were measured on a CPU.
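One way to reproduce a size comparison like this is to serialize each model's state_dict in memory and compare byte counts. This sketch uses a hypothetical Linear-heavy model and dynamic quantization; the helper state_dict_size_mb is an assumption, and the exact ratio depends on how much of a model lives in quantized layers.

```python
import io

import torch
import torch.nn as nn


def state_dict_size_mb(model: nn.Module) -> float:
    """Size of the serialized state_dict in megabytes, measured in memory."""
    buffer = io.BytesIO()
    torch.save(model.state_dict(), buffer)
    return buffer.getbuffer().nbytes / 1e6


# Hypothetical FC-heavy model: almost all parameters sit in Linear layers.
float_model = nn.Sequential(nn.Linear(4096, 512), nn.ReLU(), nn.Linear(512, 10)).eval()
quantized_model = torch.quantization.quantize_dynamic(float_model, {nn.Linear}, dtype=torch.qint8)

original_mb = state_dict_size_mb(float_model)
quantized_mb = state_dict_size_mb(quantized_model)
print(f"original: {original_mb:.2f} MB, quantized: {quantized_mb:.2f} MB, "
      f"ratio: {quantized_mb / original_mb:.2f}")
```

Since int8 weights take one byte instead of four, a model dominated by Linear layers should come out close to a quarter of its original size.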

NOTE: Training and testing will be much faster on one or more GPUs. PyTorch's quantized models, however, run on CPU backends.

Here is the model comparison and summary table:

Conclusion

We have implemented and evaluated pruning and quantization as compression techniques in our AI solutions. These techniques are still being actively researched to make them more effective. Note, however, that the performance of these CNN models also depends on the underlying hardware. In applications like self-driving cars, we have to make a trade-off between model performance and model complexity: if model precision is of utmost importance, we should use the original, uncompressed model; if the model must be deployed in limited space, the compressed model can be used.

References:

- 1. Y. Cheng, D. Wang, P. Zhou, and T. Zhang, “A Survey of Model Compression and Acceleration for Deep Neural Networks,” pp. 1–10, 2017. [Online]. Available: http://arxiv.org/abs/1710.09282

- 2. An Overview of Model Compression Techniques for Deep Learning in Space

- 3. Pruning Tutorial, PyTorch Tutorials 1.9.0+cu102 documentation

- 4. Quantization, PyTorch 1.9.0 documentation

- 5. Pruning in Keras example, TensorFlow Model Optimization documentation

- 6. Post-training integer quantization, TensorFlow Lite documentation